Introduction

In the last decade, few technologies have had as transformative an impact on the world as Deep Learning. From powering voice assistants like Siri and Alexa to enabling self-driving cars, medical diagnostics, and content recommendation engines, deep learning has become the beating heart of modern artificial intelligence (AI).

Deep learning is not a new concept: it builds on decades of research in neural networks and computational learning theory. What makes it revolutionary today is the combination of big data, massive computational power, and improved algorithms, which together let machines recognize patterns and make decisions with accuracy that earlier approaches could not reach on many tasks.

This article explores the foundations of deep learning, its working principles, key architectures, applications, advantages, challenges, and its role in shaping the future of AI and human innovation.

What is Deep Learning?

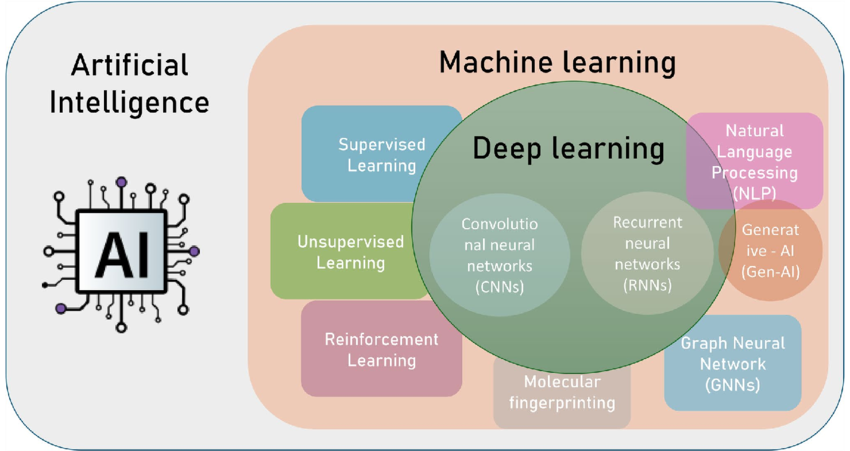

Deep Learning (DL) is a subset of Machine Learning (ML) that uses artificial neural networks (ANNs) with multiple layers — hence the term “deep.”

While traditional ML algorithms often rely on features hand-crafted by humans, deep learning models automatically learn hierarchical feature representations from raw data. These models are loosely inspired by the human brain: they consist of interconnected layers of nodes (neurons) that process and transform data inputs to produce meaningful outputs.

In simple terms:

- Machine learning learns from data.

- Deep learning also learns which features to extract from the data, identifying patterns, relationships, and abstractions with minimal human intervention.

The Evolution of Deep Learning

The history of deep learning spans more than eight decades:

- 1940s–1950s: The Birth of Neural Networks

  - The concept of the artificial “neuron” was introduced by Warren McCulloch and Walter Pitts in 1943.

  - Frank Rosenblatt’s Perceptron (1958) was one of the first algorithms that could learn its weights from data for classification tasks.

- 1970s–1980s: The AI Winter and Backpropagation

  - Neural networks faced skepticism due to limited computational power and poor results.

  - The popularization of the backpropagation algorithm by Rumelhart, Hinton, and Williams in 1986 reignited interest in multilayer networks.

- 2000s–2010s: The Deep Learning Revolution

  - The availability of GPUs, large datasets, and improved algorithms sparked a new wave of progress.

  - Breakthroughs like AlexNet, which won the 2012 ImageNet Large Scale Visual Recognition Challenge (ILSVRC), demonstrated deep learning’s potential in image recognition.

- 2020s: Deep Learning Everywhere

  - Today, deep learning drives innovations in natural language processing (NLP), autonomous systems, generative AI, and more.

How Deep Learning Works

At its core, deep learning involves training artificial neural networks to recognize patterns in data. Let’s break down the basic workflow:

1. Input Layer

This layer receives the raw data, such as images, text, or audio. Each input node corresponds to one feature, for example the intensity of a single pixel.

2. Hidden Layers

Multiple hidden layers transform the data step by step. Each neuron computes a weighted sum of its inputs plus a bias term, applies a non-linear activation function, and passes the result to the next layer.

Deeper networks can capture more abstract and higher-level features.
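The computation inside each layer can be sketched in plain Python. This is a minimal illustration, assuming a fully connected (dense) layer; the helper names `dense_layer`, `relu`, and `identity` and all weight values are made up for the example. Real frameworks perform the same arithmetic as matrix multiplications, usually on GPUs.

```python
def relu(z):
    # ReLU activation: keep positive values, replace negatives with 0
    return max(0.0, z)

def identity(z):
    # No activation, e.g. for a regression output
    return z

def dense_layer(inputs, weights, biases, activation):
    """Forward pass of one fully connected layer.

    weights[j][i] is the weight connecting input i to neuron j.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # Weighted sum of the inputs plus the neuron's bias
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        # Apply the activation and pass the result on to the next layer
        outputs.append(activation(z))
    return outputs

x = [1.0, 2.0]  # input layer: two features
hidden = dense_layer(x, [[0.5, -0.25], [1.0, 1.0]], [0.0, -1.0], relu)
output = dense_layer(hidden, [[1.5, -0.5]], [0.25], identity)
print(hidden, output)  # [0.0, 2.0] [-0.75]
```

Stacking more calls to `dense_layer` is what makes a network "deep": each layer works on the representation produced by the previous one.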

3. Output Layer

The final layer produces the desired output — for example, classifying an image as a “cat” or “dog,” predicting a number, or generating text.

4. Training Process

Training involves feeding data into the network and comparing predictions to actual labels using a loss function.

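The model then adjusts its weights to reduce the error: backpropagation computes the gradient of the loss with respect to every weight, and gradient descent (or one of its variants) moves each weight a small step in the direction that lowers the loss. Over many iterations, the model “learns” the patterns in the data.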
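The training loop described above can be sketched for the smallest possible network: a single sigmoid neuron (equivalent to logistic regression) learning the logical AND function. This is an illustrative sketch, assuming binary cross-entropy loss and full-batch gradient descent; the dataset, learning rate, and epoch count are arbitrary choices for the example. For this particular loss and activation, backpropagation reduces to the simple rule that the gradient with respect to the neuron's pre-activation is `prediction - label`.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Toy labeled dataset: the logical AND of two binary inputs
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 0.0), ([1.0, 0.0], 0.0), ([1.0, 1.0], 1.0)]

weights, bias = [0.0, 0.0], 0.0
learning_rate = 0.5

def predict(x):
    # Forward pass: weighted sum plus bias, squashed into a probability
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def mean_loss():
    # Binary cross-entropy averaged over the dataset
    return sum(-(y * math.log(predict(x)) + (1 - y) * math.log(1 - predict(x)))
               for x, y in data) / len(data)

initial_loss = mean_loss()
for epoch in range(2000):
    grad_w, grad_b = [0.0, 0.0], 0.0
    for x, y in data:
        # Backward pass: for sigmoid + cross-entropy, dLoss/dz = prediction - label
        error = predict(x) - y
        grad_w = [g + error * xi / len(data) for g, xi in zip(grad_w, x)]
        grad_b += error / len(data)
    # Gradient descent: step each parameter against its gradient
    weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
    bias -= learning_rate * grad_b

final_loss = mean_loss()
print(round(initial_loss, 3), round(final_loss, 3))
print([round(predict(x)) for x, _ in data])  # [0, 0, 0, 1]
```

Deep networks follow the same forward-loss-backward-update cycle; the difference is that backpropagation applies the chain rule through many layers instead of one.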

Key Concepts in Deep Learning

1. Artificial Neural Networks (ANNs)

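The fundamental building block of deep learning. ANNs are loosely modeled on the way biological neurons receive, combine, and pass on signals, although they are mathematical functions rather than faithful simulations of the brain.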

2. Activation Functions

These introduce non-linearity, enabling networks to model complex relationships. Common functions include:

- Sigmoid

- ReLU (Rectified Linear Unit)

- Tanh

- Softmax
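The four activation functions listed above can be written in a few lines of standard-library Python. This is an illustrative sketch; the function names are our own. Softmax differs from the others: it acts on a whole vector of scores rather than a single number, and it is typically used in the output layer of a classifier to turn scores into probabilities.

```python
import math

def sigmoid(z):
    # Squashes any real number into the range (0, 1)
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    # Passes positive values through, zeroes out negatives
    return max(0.0, z)

def tanh(z):
    # Squashes into (-1, 1); zero-centered, unlike sigmoid
    return math.tanh(z)

def softmax(scores):
    # Turns a vector of scores into probabilities that sum to 1.
    # Subtracting the maximum first avoids overflow in exp().
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

print(sigmoid(0.0))            # 0.5
print(relu(-3.0), relu(2.5))   # 0.0 2.5
print(tanh(0.0))               # 0.0
print(softmax([1.0, 2.0, 3.0]))
```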

3. Loss Functions

They measure how far the model’s predictions are from the true targets; training aims to make this value as small as possible. Examples:

- Mean Squared Error (MSE) for regression

- Cross-Entropy Loss for classification
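Both losses are short formulas. The sketch below assumes the simplest textbook forms: MSE averaged over the outputs, and cross-entropy for a single example whose predicted class probabilities are already given (for instance by softmax). The function names and numbers are illustrative.

```python
import math

def mean_squared_error(predictions, targets):
    """Average of squared differences; common for regression."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

def cross_entropy(probabilities, true_class):
    """Negative log of the probability assigned to the correct class."""
    return -math.log(probabilities[true_class])

print(mean_squared_error([2.5, 0.0], [3.0, -1.0]))  # (0.25 + 1.0) / 2 = 0.625
print(cross_entropy([0.7, 0.2, 0.1], true_class=0))  # about 0.357
```

Cross-entropy grows sharply as the probability of the correct class approaches 0, so confident wrong answers are penalized much more than hesitant ones.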

4. Optimization Algorithms

Optimizers use the gradients computed by backpropagation to update the model weights:

- Gradient Descent

- Adam Optimizer

- RMSprop
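To show how an optimizer uses gradients, the sketch below minimizes a one-parameter toy loss f(w) = (w - 3)^2 with plain gradient descent and with Adam. The loss, learning rates, and step counts are assumptions chosen for illustration; the Adam hyperparameters (beta1 = 0.9, beta2 = 0.999, eps = 1e-8) are the commonly used defaults.

```python
import math

def grad(w):
    # Gradient of the toy loss f(w) = (w - 3)^2, which is minimized at w = 3
    return 2.0 * (w - 3.0)

def gradient_descent(w, lr=0.1, steps=100):
    for _ in range(steps):
        w -= lr * grad(w)  # step against the gradient
    return w

def adam(w, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    m, v = 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g      # running mean of gradients (momentum)
        v = beta2 * v + (1 - beta2) * g * g  # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)         # correct the bias toward 0 at early steps
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)  # per-parameter scaled step
    return w

print(gradient_descent(0.0))  # close to 3.0
print(adam(0.0))              # close to 3.0
```

RMSprop keeps only the running mean of squared gradients (the `v` term) to scale each step; Adam combines that scaling with momentum.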

5. Overfitting and Regularization

Deep models can overfit: they memorize the training data instead of learning patterns that generalize to new, unseen data. Techniques like dropout, L2 regularization, and data augmentation help mitigate this issue.
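Two of these techniques can be sketched directly. The example below assumes the common "inverted dropout" variant, which rescales the surviving activations during training so that nothing changes at inference time, and an L2 penalty that would be added to the training loss. The function names, `p_drop`, and `lam` values are illustrative.

```python
import random

def dropout(activations, p_drop, training=True, rng=random):
    """Inverted dropout: zero each unit with probability p_drop during
    training and scale the survivors by 1 / (1 - p_drop), so the expected
    activation is unchanged and no rescaling is needed at inference."""
    if not training or p_drop == 0.0:
        return list(activations)
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

def l2_penalty(weights, lam):
    """L2 regularization term added to the loss: lam * sum(w^2).
    It discourages large weights, which tends to reduce overfitting."""
    return lam * sum(w * w for w in weights)

rng = random.Random(0)
print(dropout([1.0, 2.0, 3.0, 4.0], p_drop=0.5, rng=rng))  # some zeroed, others doubled
print(dropout([1.0, 2.0], p_drop=0.5, training=False))     # unchanged at inference
print(l2_penalty([0.5, -1.0, 2.0], lam=0.01))              # 0.01 * 5.25
```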

Popular Deep Learning Architectures

1. Convolutional Neural Networks (CNNs)

- Specialized for image and video analysis.

- Use convolutional layers to automatically extract spatial features.

- Applications: facial recognition, medical imaging, self-driving car vision systems.
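The core operation of a convolutional layer is sliding a small filter (kernel) over the image and computing a weighted sum at each position. The sketch below is a naive single-channel "valid" convolution with no padding and stride 1; like most deep learning libraries, it computes cross-correlation (the kernel is not flipped). The image and the hand-written edge-detecting kernel are assumptions for illustration; in a CNN the kernel values are learned during training.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (strictly, cross-correlation): slide the kernel
    over the image and take a weighted sum of the pixels under it."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            output[i][j] = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
    return output

# A 4x4 image with a vertical edge between columns 1 and 2
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-written vertical-edge detector (a CNN would learn filters like this)
kernel = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, kernel))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The strong response in the middle column marks where the edge is, regardless of which row it appears in; reusing the same small kernel everywhere is what lets CNNs detect spatial features with few parameters.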

2. Recurrent Neural Networks (RNNs)

- Designed for sequential data like text or time series.

- Carry information from previous inputs forward through a hidden state that acts as internal memory.

- Variants like LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit) use gating mechanisms to retain information over longer sequences.
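The "internal memory" of an RNN is its hidden state, updated at every step from the current input and the previous state. The sketch below is a deliberately minimal vanilla RNN with a single scalar hidden state and a tanh activation; real RNNs use vector hidden states and weight matrices, and the weights here (`w_x`, `w_h`, `b`) are made-up values rather than trained ones.

```python
import math

def rnn_forward(inputs, w_x, w_h, b):
    """Run a minimal vanilla RNN with a scalar hidden state over a sequence.

    At each step: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)
    """
    h = 0.0  # initial hidden state ("empty memory")
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# The final input is 1.0 in both sequences, but the final states differ,
# because the hidden state carries information about earlier inputs.
print(rnn_forward([0.0, 0.0, 1.0], w_x=1.0, w_h=0.5, b=0.0)[-1])
print(rnn_forward([1.0, 1.0, 1.0], w_x=1.0, w_h=0.5, b=0.0)[-1])
```

In a vanilla RNN the influence of early inputs tends to fade over long sequences; LSTM and GRU cells add gates that control what the state keeps and forgets.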

3. Generative Adversarial Networks (GANs)

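- Consist of two networks, a Generator and a Discriminator, that compete against each other: the generator creates synthetic samples, while the discriminator tries to tell them apart from real data.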

- Capable of generating realistic images, music, and text.

- Used in creative industries and data synthesis.
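The competition between the two networks is usually written as a minimax game. In the original GAN formulation (Goodfellow et al., 2014), the discriminator D outputs the probability that its input is real, and the generator G maps random noise z, drawn from a simple distribution p_z, to a synthetic sample:

```latex
\min_{G}\max_{D}\; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

D is trained to maximize this value (scoring real data near 1 and generated data near 0), while G is trained to minimize it, which pushes D(G(z)) toward 1. In practice the generator is often trained to maximize log D(G(z)) instead, because that variant gives stronger gradients early in training.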

4. Autoencoders

- Unsupervised models that learn to compress and reconstruct data.

- Useful for dimensionality reduction, anomaly detection, and data denoising.
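The compress-then-reconstruct idea can be sketched with the smallest possible autoencoder: a linear encoder that maps 2-D points to a single number and a linear decoder that maps it back, trained by gradient descent on the squared reconstruction error. The toy data (points on the line x2 = 2 * x1), the initial weights, and the learning rate are assumptions for illustration; practical autoencoders are deep and non-linear.

```python
# Toy 2-D data lying on the line x2 = 2 * x1, so a 1-number code suffices
data = [(t, 2.0 * t) for t in (-1.0, -0.5, 0.5, 1.0)]

enc = [0.1, 0.1]  # encoder weights: code = enc[0]*x1 + enc[1]*x2
dec = [0.1, 0.1]  # decoder weights: reconstruction = (dec[0]*code, dec[1]*code)
lr = 0.01

def encode(x):
    return enc[0] * x[0] + enc[1] * x[1]

def reconstruction_error(x):
    code = encode(x)
    return sum((dec[k] * code - x[k]) ** 2 for k in range(2))

def mean_loss():
    return sum(reconstruction_error(x) for x in data) / len(data)

initial_loss = mean_loss()
for step in range(1000):
    g_enc, g_dec = [0.0, 0.0], [0.0, 0.0]
    for x in data:
        code = encode(x)                                      # compress: 2 numbers -> 1
        residual = [dec[k] * code - x[k] for k in range(2)]   # decode and compare
        d_code = sum(2 * residual[k] * dec[k] for k in range(2))  # dError/dcode
        for k in range(2):
            g_dec[k] += 2 * residual[k] * code / len(data)
            g_enc[k] += d_code * x[k] / len(data)
    enc = [w - lr * g for w, g in zip(enc, g_enc)]
    dec = [w - lr * g for w, g in zip(dec, g_dec)]
final_loss = mean_loss()

print(final_loss)                         # close to 0: training data is reconstructed well
print(reconstruction_error((1.0, -2.0)))  # off the line: large error, a possible anomaly
```

The second line shows the anomaly-detection use case: inputs unlike the training data reconstruct poorly, so a high reconstruction error can serve as an anomaly score.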

5. Transformers

- The backbone of modern natural language processing (NLP).

- Use self-attention mechanisms to capture relationships between words or tokens.

- Power models like GPT, BERT, T5, and LLaMA — revolutionizing language understanding and generation.
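Self-attention lets every token build its new representation as a weighted average of all tokens, with weights given by softmax(Q K^T / sqrt(d)) where Q are queries, K keys, and d the key dimension. The sketch below is a single attention head in plain Python. For brevity it assumes the token embeddings are used directly as queries, keys, and values; a real Transformer first multiplies them by learned projection matrices and runs several heads in parallel. The token vectors are made up.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention: each output row is a weighted average of
    the value vectors, weighted by how well that token's query matches every
    token's key."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # attention weights, sum to 1
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

# Three tokens with 2-dimensional embeddings (hypothetical values)
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```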

Applications of Deep Learning

Deep learning has permeated almost every sector of modern life. Some of the most impactful applications include:

1. Computer Vision

- Facial Recognition: Used in security systems, social media tagging, and smartphones.

- Medical Imaging: Assists doctors in detecting tumors or anomalies from X-rays and MRIs.

- Autonomous Vehicles: Enables real-time object detection and navigation.

2. Natural Language Processing (NLP)

- Powers chatbots, translation systems, and virtual assistants.

- Deep learning models understand syntax, semantics, and context — enabling more natural human-computer interactions.

3. Speech Recognition

- Converts spoken language into text (used in Google Assistant, Siri, Alexa).

- Improves accessibility and voice-driven user interfaces.

4. Finance and Business Analytics

- Detects fraud, predicts market trends, and optimizes credit scoring.

- Automates customer support with intelligent chatbots.

5. Healthcare

- Predicts diseases, assists in drug discovery, and personalizes patient care.

- AI-driven diagnostics are becoming more accurate than traditional methods in some domains.

6. Manufacturing and Robotics

- Enables predictive maintenance, quality control, and intelligent automation.

- Robots equipped with vision and learning capabilities can adapt to changing environments.

7. Entertainment and Media

- Recommendation systems (Netflix, YouTube, Spotify).

- Deepfake generation, content creation, and video editing automation.

Advantages of Deep Learning

- High Accuracy: Deep networks often outperform traditional ML models on complex tasks such as image and speech recognition.

- Automated Feature Extraction: Reduces the need for manual feature engineering; the model learns relevant features by itself.

- Scalability: Performance often keeps improving as more data and compute become available, making deep learning well suited to big data environments.

- Versatility: Applicable across domains, from natural language and images to audio and sensor data.

- Adaptability: With techniques like transfer learning and fine-tuning, pretrained models can be adapted to new tasks with comparatively little additional data and training.

Challenges and Limitations

Despite its success, deep learning faces several challenges:

- Data Requirements: Deep learning models typically need large amounts of training data, often labeled, which can be expensive and time-consuming to collect.

- Computational Costs: Training large neural networks demands high-performance GPUs or TPUs, consuming significant energy and resources.

- Explainability: Deep models often function as “black boxes,” making it difficult to understand how they reach their decisions.

- Bias and Fairness: Models can inherit and amplify biases present in training data, raising ethical concerns.

- Overfitting: Without proper regularization, models may perform well on training data but fail on new, real-world data.

- Security Threats: Models are vulnerable to adversarial attacks, in which small, carefully crafted perturbations to the input cause wrong predictions.
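A classic example of such an attack is the Fast Gradient Sign Method (FGSM): move every input feature by a small amount epsilon in the direction that increases the model's loss. The sketch below applies it to a hypothetical, already-trained logistic regression model, where the input gradient of the cross-entropy loss has the closed form (p - y) * w. The weights, input, and epsilon are made-up values. With only four features epsilon must be fairly large to flip the prediction; in images with thousands of pixels, the per-pixel change can be small enough to be invisible, because the effects add up across all pixels.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    """Probability of class 1 under a (hypothetical) trained logistic model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm(w, b, x, y, epsilon):
    """Fast Gradient Sign Method: shift each feature by epsilon in the
    direction that increases the loss. For logistic regression with
    cross-entropy loss, dLoss/dx = (p - y) * w."""
    p = predict(w, b, x)
    grad_x = [(p - y) * wi for wi in w]
    # (g > 0) - (g < 0) is the sign of g: +1, 0, or -1
    return [xi + epsilon * ((g > 0) - (g < 0)) for xi, g in zip(x, grad_x)]

# Hypothetical model and an input it correctly classifies as class 1
w, b = [2.0, -3.0, 1.5, 4.0], -0.5
x, y = [0.3, 0.1, 0.2, 0.2], 1
x_adv = fgsm(w, b, x, y, epsilon=0.15)
print(round(predict(w, b, x), 3), round(predict(w, b, x_adv), 3))  # 0.711 0.337
```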

Recent Advances in Deep Learning

1. Transfer Learning

Models pre-trained on large datasets can be fine-tuned for specific tasks, reducing training time and data requirements.

2. Federated Learning

Allows models to be trained across decentralized devices (like smartphones) while the raw data stays on each device; only model updates are shared, which enhances privacy.
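The core loop of Federated Averaging (FedAvg), a widely used federated learning algorithm, can be sketched with a one-parameter linear model. In each round the server broadcasts the current weight, every client trains locally on data that never leaves the device, and the server averages the returned weights, weighted by how many samples each client holds. The clients, data, learning rate, and round counts below are hypothetical; real systems add client sampling, secure aggregation, and handling of unreliable devices.

```python
def local_update(w, data, lr=0.05, epochs=5):
    """A client refines the global weight on its own private data.
    Model: y_hat = w * x, squared-error loss, plain gradient descent."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_averaging(clients, rounds=20):
    """Broadcast, train locally, then average weights by dataset size."""
    w_global = 0.0
    total = sum(len(d) for d in clients)
    for _ in range(rounds):
        local_weights = [local_update(w_global, d) for d in clients]
        # Only the weights reach the server, never the raw (x, y) pairs
        w_global = sum(len(d) * w for d, w in zip(clients, local_weights)) / total
    return w_global

# Three hypothetical devices, each holding private samples of y = 3x
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(0.5, 1.5)],
    [(3.0, 9.0), (1.5, 4.5), (2.5, 7.5)],
]
print(federated_averaging(clients))  # converges toward 3.0
```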

3. Explainable AI (XAI)

Efforts to make deep models interpretable and transparent are gaining traction in regulated sectors like healthcare and finance.

4. Multimodal Learning

Integrating multiple types of data (text, image, audio) to build more holistic AI systems.

5. Generative AI

Deep learning is powering creative applications, from art and music generation to large language models like GPT-5, which can generate human-like text and carry out multi-step reasoning.

The Future of Deep Learning

The next phase of deep learning will be marked by efficiency, transparency, and collaboration between humans and AI. Some emerging trends include:

- Smaller, Smarter Models: Techniques like model pruning and quantization make deep learning models smaller and faster, so they can be deployed on edge devices such as phones and sensors.

- Neuromorphic Computing: Hardware inspired by the structure of the brain aims to run AI computations while consuming far less energy.

- Integration with Quantum Computing: Quantum deep learning may unlock new frontiers in optimization and problem-solving.

- Ethical and Responsible AI: As AI increasingly influences daily life, frameworks for fairness, accountability, and privacy will be crucial.

- Human-AI Collaboration: Future systems will augment human creativity and decision-making rather than replace it.
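Quantization, one of the efficiency techniques mentioned above, stores weights at lower precision, for example 8-bit integers instead of 32-bit floats, which cuts storage by a factor of four. Below is a minimal sketch of symmetric per-tensor int8 quantization, assuming a single scale factor for the whole weight list; production toolkits also use per-channel scales, zero points, and calibration data. The weight values are made up.

```python
def quantize_int8(values):
    """Symmetric per-tensor int8 quantization: map floats to integers in
    [-127, 127] using a single scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale

def dequantize(q, scale):
    # Approximate reconstruction of the original floats
    return [qi * scale for qi in q]

weights = [0.42, -1.27, 0.003, 0.88, -0.5]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)  # [42, -127, 0, 88, -50]
print(max(abs(a - b) for a, b in zip(weights, restored)))  # at most scale / 2
```

The trade-off is visible in the last line: each weight is off by at most half a quantization step, which well-trained networks usually tolerate with little loss in accuracy.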

Conclusion

Deep learning has transformed AI from a theoretical pursuit into a practical and pervasive force shaping our world. Its ability to automatically extract knowledge from massive datasets has led to breakthroughs across industries — from healthcare and transportation to communication and entertainment.

However, with great power comes great responsibility. As deep learning systems grow more autonomous and influential, we must ensure they remain transparent, fair, and aligned with human values.

The journey of deep learning is still in its early stages. With continuous innovations in algorithms, hardware, and data ethics, the technology will continue to push the boundaries of what machines — and humanity — can achieve together.